An Introduction to Reinforcement Learning and Its Methods

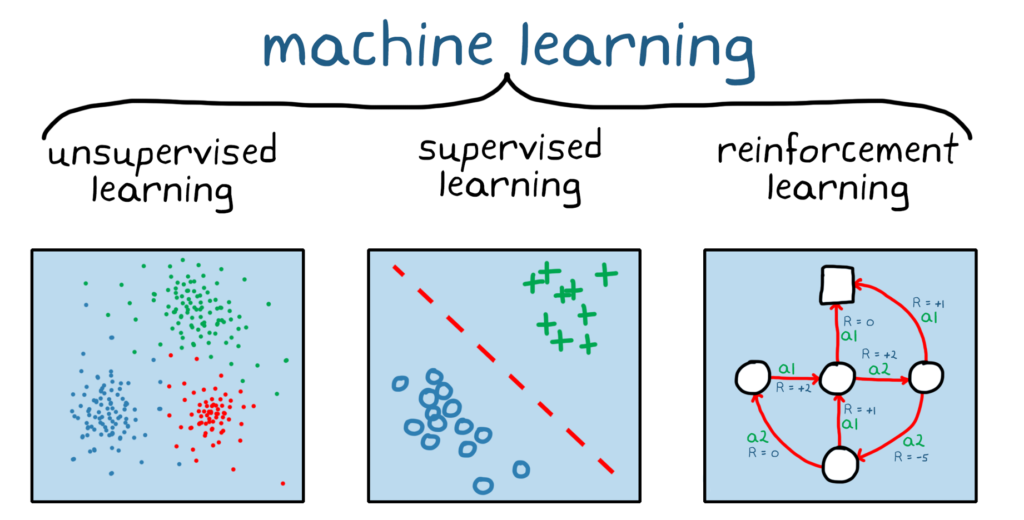

We previously discussed supervised and unsupervised machine learning; now it's time to look more closely at reinforcement learning. Familiarity with machine learning gives a rough idea of what reinforcement learning is about, but if you are new to the field or have never quite understood what it means, this article explains it in more detail.

What is reinforcement learning?

Reinforcement learning (RL) is the process of training machine learning models to make a sequence of decisions. In reinforcement learning, an agent interacts with an environment: it takes actions, observes how the environment responds, and adjusts its behavior based on that feedback. This is similar to how children explore the world and learn which actions help them achieve a goal.
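The interaction described above can be sketched as a simple loop: the agent observes a state, chooses an action, and the environment answers with a new state and a reward. This is a minimal sketch under stated assumptions: the five-cell corridor, the class name `CorridorEnv`, its `reset`/`step` methods, and the reward values are hypothetical choices for illustration, not part of any particular library. The agent here acts at random and does not learn yet.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1; reaching the last cell ends the episode."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        """Start a new episode in cell 0 and return the initial state."""
        self.state = 0
        return self.state

    def step(self, action):
        """Apply an action (0 = left, 1 = right) and return (next_state, reward, done)."""
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)  # walls block movement
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # +1 at the goal, a small penalty for every other step
        return self.state, reward, done


def run_random_episode(env, rng, max_steps=100):
    """One episode of the agent-environment loop with an agent that picks actions at random."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = rng.choice([0, 1])              # the agent acts...
        state, reward, done = env.step(action)   # ...and the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


print(run_random_episode(CorridorEnv(), random.Random(0)))
```

A learning agent would use the `state` and `reward` it receives to change how it picks the next action; the algorithms later in this article are different ways of doing that.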

What is the difference between supervised, unsupervised, and reinforcement learning?

Both supervised and reinforcement learning learn a mapping from inputs to outputs. Unlike supervised learning, however, where the model receives feedback in the form of the correct output for each input, reinforcement learning uses rewards and punishments as signals of positive and negative behavior. Reinforcement learning and unsupervised learning differ in their goals: as discussed in our previous article, unsupervised learning looks for similarities and differences between data points, whereas reinforcement learning looks for a policy (a way of choosing actions) that maximizes the agent's total reward.

Types of reinforcement learning

Borrowing terms from behavioral psychology, reinforcement is usually divided into two types: positive reinforcement and negative reinforcement.

- Positive reinforcement adds a desirable stimulus when the expected behavior occurs, which increases the probability that the behavior will be repeated. For example, a parent praises their child (reinforcing stimulus) for doing homework (behavior).

- Negative reinforcement increases the probability that a behavior will be repeated by removing an unpleasant condition. For example, pressing a button (behavior) turns off a loud alarm (aversive stimulus), so the person is more likely to press the button again next time.

Elements of reinforcement learning

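Aside from the agent and the environment, a reinforcement learning system has four main elements: a policy, a reward signal, a value function, and, optionally, a model of the environment. The model predicts how the environment will respond to an action (the next state and reward) so that the agent can plan ahead.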

Policy.

A policy defines how an agent behaves at a given time: it maps the states the agent perceives to the actions it takes. In the simplest cases, the policy is a small function or a lookup table; in others, it involves extensive computation, such as a search process or a neural network. The policy is the core of the agent, since it alone determines how the agent behaves.
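The simple cases mentioned above, a lookup table and a small function, can be sketched in a few lines of Python. The robot-vacuum states and actions in the table are hypothetical, and the epsilon-greedy function is one common way (not described in this article) of turning estimated action values into a policy that usually picks the best-looking action but occasionally explores.

```python
import random

# A deterministic policy stored as a lookup table: perceived state -> action.
# The states and actions are hypothetical examples for a robot vacuum.
TABLE_POLICY = {
    "low_battery": "return_to_dock",
    "dirty_floor": "clean",
    "obstacle_ahead": "turn",
}


def table_policy(state):
    """Look up the action for a state."""
    return TABLE_POLICY[state]


def epsilon_greedy_policy(action_values, epsilon, rng):
    """A stochastic policy as a function: explore with probability epsilon, else take the best action."""
    if rng.random() < epsilon:
        return rng.randrange(len(action_values))                           # explore
    return max(range(len(action_values)), key=lambda a: action_values[a])  # exploit


rng = random.Random(0)
print(table_policy("low_battery"))                       # return_to_dock
print(epsilon_greedy_policy([0.1, 0.5, 0.2], 0.0, rng))  # 1 (epsilon = 0 means always greedy)
```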

Reward signal.

At each time step, the agent receives an immediate numerical signal from the environment called the reward signal, or simply the reward. As stated earlier, rewards can be positive or negative depending on the agent's actions.
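Since reinforcement learning aims to maximize the reward accumulated over time rather than any single reward, a common way to score a sequence of rewards is the discounted return, G = r1 + γ·r2 + γ²·r3 + ..., where each later reward is multiplied by one more factor of the discount rate γ. The discount rate is an assumption not introduced in this article; γ = 0.9 below is only an example value, and γ = 1 gives the plain sum.

```python
def discounted_return(rewards, gamma=0.9):
    """Return r1 + gamma*r2 + gamma^2*r3 + ... for a list of rewards [r1, r2, r3, ...]."""
    g = 0.0
    for r in reversed(rewards):  # work backwards using G = r + gamma * G_next
        g = r + gamma * g
    return g


# Two small step penalties followed by a goal reward
print(discounted_return([-0.1, -0.1, 1.0]))             # approximately 0.62
print(discounted_return([-0.1, -0.1, 1.0], gamma=1.0))  # approximately 0.8
```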

Value function.

The value function indicates what is good in the long run: the value of a state (or of an action taken in a state) is the total reward the agent can expect to accumulate from that point on. Values depend on both the rewards and the agent's policy, and the agent estimates them so that it can choose the actions that maximize its long-term reward.
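To see how values depend on the policy, the sketch below computes state values for a hypothetical five-cell corridor (reward +1 for reaching the last cell, -0.1 for every other step, discount rate γ = 0.9) under two fixed policies. It uses iterative policy evaluation, a standard dynamic-programming technique not covered in this article, which repeatedly applies V(s) = r + γ·V(s') until the values stop changing; because the corridor is deterministic, each state has exactly one successor s' under a given policy. The environment and all numbers are assumptions for illustration.

```python
def evaluate_policy(policy, n_states=5, gamma=0.9, tol=1e-10):
    """Iterative policy evaluation for a hypothetical deterministic corridor.

    States are 0..n_states-1 and the last state is terminal (its value stays 0).
    policy[s] is 0 (move left) or 1 (move right); walls keep the agent inside the corridor.
    """
    goal = n_states - 1
    values = [0.0] * n_states
    while True:
        delta = 0.0
        for s in range(goal):
            s_next = min(max(s + (1 if policy[s] == 1 else -1), 0), goal)
            reward = 1.0 if s_next == goal else -0.1
            new_value = reward + gamma * values[s_next]  # one-step lookahead
            delta = max(delta, abs(new_value - values[s]))
            values[s] = new_value
        if delta < tol:
            return values


always_right = [1, 1, 1, 1]
always_left = [0, 0, 0, 0]
print([round(v, 3) for v in evaluate_policy(always_right)])  # [0.458, 0.62, 0.8, 1.0, 0.0]
print([round(v, 3) for v in evaluate_policy(always_left)])   # [-1.0, -1.0, -1.0, -1.0, 0.0]
```

The rewards are the same in both runs; only the policy changes. Under the always-left policy the agent never reaches the goal and keeps paying the step penalty, so every non-terminal state is worth -0.1 / (1 - 0.9) = -1.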

What are some of the most widely used reinforcement learning algorithms?

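Reinforcement learning algorithms are widely used in AI systems and game playing. They can be classified into two groups: model-free algorithms, which learn directly from trial-and-error experience, and model-based algorithms, which use a model of the environment to plan. All four algorithms described below (Q-learning, Monte Carlo methods, SARSA, and deep Q-networks) are model-free.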

Q-Learning.

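Q-learning is an off-policy temporal difference (TD) learning algorithm. TD methods update an estimate by comparing temporally successive predictions, rather than waiting for the final outcome of an episode. Q-learning learns the action-value function Q(s, a), which estimates how good it is to take action a in state s. It is called off-policy because its updates assume the best action is taken in the next state, regardless of the action the agent actually takes there.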
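Q-learning's update rule is Q(s, a) ← Q(s, a) + α·[r + γ·max over a' of Q(s', a') - Q(s, a)], where α is a learning rate and γ a discount rate. The sketch below applies it to a hypothetical five-cell corridor (reward +1 for reaching cell 4, -0.1 for every other step) with an epsilon-greedy behavior policy. The environment, the function names, and the hyperparameter values are assumptions chosen for illustration.

```python
import random

GOAL = 4          # hypothetical corridor: cells 0..4, reaching cell 4 ends the episode
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic corridor dynamics: return (next_state, reward, done)."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy behavior policy; ties between equal Q-values are broken at random."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state])
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]  # Q-table: one row per state, one column per action
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Off-policy target: bootstrap from the best next action,
            # whatever action the behavior policy actually takes next.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q = q_learning()
print([max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)])  # greedy action in cells 0..3
```

After training, the greedy action in every cell should be 1 (move right), the shortest route to the goal, even though the agent kept exploring during training.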

The flowchart below explains how Q-learning works:

Monte Carlo Method.

Monte Carlo (MC) methods let an agent find a policy that maximizes cumulative reward by averaging the returns (total rewards) actually observed in complete episodes. They apply only to episodic tasks, which have a definite end, because the return of an episode is known only once the episode finishes.

With this method, the agent learns directly from episodes of experience, without needing a model of the environment. At the start, the agent has no idea which actions lead to the highest reward, so it chooses actions at random. After many episodes, its estimates of the return that follows each action become more accurate, and it increasingly selects the actions that lead to the highest rewards, while still exploring occasionally.
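The procedure above can be sketched as on-policy, first-visit Monte Carlo control on a hypothetical five-cell corridor (reward +1 for reaching cell 4, -0.1 for every other step). Each episode is played to the end, and only then is the return following the first visit to each state-action pair averaged into its estimate. All estimates start equal and ties are broken at random, so the first episode is a pure random walk; after that, an epsilon-greedy policy keeps some exploration going. The environment, γ = 0.9, ε = 0.1, and the function names are assumptions for illustration.

```python
import random

GOAL = 4          # hypothetical corridor: cells 0..4, reaching cell 4 ends the episode
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic corridor dynamics: return (next_state, reward, done)."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def mc_control(episodes=2000, gamma=0.9, epsilon=0.1, seed=0):
    """On-policy first-visit Monte Carlo control with an epsilon-greedy policy."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL) for a in ACTIONS}
    visits = {key: 0 for key in q}

    def policy(state):
        if rng.random() < epsilon:
            return rng.choice(ACTIONS)                                    # explore
        best = max(q[(state, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(state, a)] == best])  # exploit, random tie-break

    for _ in range(episodes):
        # 1. Play one complete episode with the current policy.
        episode, state, done = [], 0, False
        while not done:
            action = policy(state)
            next_state, reward, done = step(state, action)
            episode.append((state, action, reward))
            state = next_state
        # 2. Record where each state-action pair first appears.
        first_visit = {}
        for t, (s, a, _) in enumerate(episode):
            first_visit.setdefault((s, a), t)
        # 3. Walk backwards accumulating the return G; average it in at first visits only.
        g = 0.0
        for t in range(len(episode) - 1, -1, -1):
            s, a, r = episode[t]
            g = r + gamma * g
            if first_visit[(s, a)] == t:
                visits[(s, a)] += 1
                q[(s, a)] += (g - q[(s, a)]) / visits[(s, a)]  # incremental mean of returns
    return q


q = mc_control()
print([max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)])  # greedy action in cells 0..3
```

Unlike the TD methods in this article, nothing is updated until an episode ends, which is why Monte Carlo methods need tasks with a definite end.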

SARSA

State-action-reward-state-action (SARSA) is an on-policy temporal difference learning method. This means that it learns the value of the policy it is actually following: each update uses the next action chosen by that same policy.

The name SARSA reflects the five quantities used to update the Q-value: the agent's current state (S), the action chosen (A), the reward for that action (R), the state the agent enters after performing the action (S'), and the action it chooses in that new state (A').
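Written out, the SARSA update is Q(S, A) ← Q(S, A) + α·[R + γ·Q(S', A') - Q(S, A)]. Compared with the Q-learning update, only the target changes: SARSA uses the action A' that its own epsilon-greedy policy actually picks in S', rather than the best action there. The sketch below assumes a hypothetical five-cell corridor (reward +1 for reaching cell 4, -0.1 for every other step); the function names and the values of α, γ, and ε are illustrative.

```python
import random

GOAL = 4          # hypothetical corridor: cells 0..4, reaching cell 4 ends the episode
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic corridor dynamics: return (next_state, reward, done)."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy policy; ties between equal Q-values are broken at random."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state])
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def sarsa(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]  # the terminal row is never updated
    for _ in range(episodes):
        state = 0                                                     # S
        action = choose_action(q, state, epsilon, rng)                # A
        done = False
        while not done:
            next_state, reward, done = step(state, action)            # R, S'
            next_action = choose_action(q, next_state, epsilon, rng)  # A', from the same policy
            # On-policy target: bootstrap from the action the agent will actually take next.
            target = reward if done else reward + gamma * q[next_state][next_action]
            q[state][action] += alpha * (target - q[state][action])
            state, action = next_state, next_action
    return q


q = sarsa()
print([max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)])  # greedy action in cells 0..3
```

Because its targets include the occasional exploratory move, SARSA's estimates describe the epsilon-greedy policy it is following, which in tasks with risky states tends to make it more cautious than Q-learning.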

Deep Q-Network (DQN)

As the name implies, DQN is a Q-learning algorithm that uses a neural network. In environments with large state spaces, storing and updating a Q-table becomes impractical, because the table needs an entry for every state-action pair. DQN addresses this by replacing the table with a neural network that takes a state as input and estimates the Q-value of each action.
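The core idea can be sketched without a deep learning library: a tiny network replaces the Q-table and is trained toward the same target as Q-learning, r + γ·max over a' of Q(s', a'). The sketch also includes two ingredients commonly used in DQN: an experience replay buffer (training on random minibatches of past transitions) and a separate target network that is refreshed periodically. The corridor environment, the one-hot state encoding, the class and function names, the network size, and all hyperparameters are assumptions chosen so the example runs in pure Python; a practical DQN would use a library such as PyTorch or TensorFlow and a far larger state space.

```python
import math
import random
from collections import deque

GOAL = 4          # hypothetical corridor: cells 0..4, reaching cell 4 ends the episode
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic corridor dynamics: return (next_state, reward, done)."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def one_hot(state):
    """Encode a cell index as the network's input vector."""
    return [1.0 if i == state else 0.0 for i in range(GOAL + 1)]


class QNetwork:
    """One hidden tanh layer; outputs one Q-value estimate per action."""

    def __init__(self, n_in, n_hidden, n_out, rng):
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        q = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return h, q

    def predict(self, x):
        return self.forward(x)[1]

    def train_step(self, x, action, target, lr):
        """One SGD step on 0.5 * (Q(x, action) - target)^2; other action outputs get no gradient."""
        h, q = self.forward(x)
        err = q[action] - target
        for j, hj in enumerate(h):
            grad_pre = err * self.w2[action][j] * (1.0 - hj * hj)  # backprop through tanh (old weight)
            self.w2[action][j] -= lr * err * hj
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * grad_pre * xi
            self.b1[j] -= lr * grad_pre
        self.b2[action] -= lr * err

    def copy(self):
        clone = QNetwork.__new__(QNetwork)
        clone.w1 = [row[:] for row in self.w1]
        clone.b1 = self.b1[:]
        clone.w2 = [row[:] for row in self.w2]
        clone.b2 = self.b2[:]
        return clone


def train_dqn(episodes=150, gamma=0.9, epsilon=0.1, lr=0.05, batch_size=8,
              target_sync=25, max_steps=50, seed=0):
    rng = random.Random(seed)
    online = QNetwork(GOAL + 1, 16, len(ACTIONS), rng)
    target_net = online.copy()
    replay = deque(maxlen=2000)  # experience replay buffer of (s, a, r, s', done)
    total_steps = 0
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                q = online.predict(one_hot(state))
                action = max(ACTIONS, key=lambda a: q[a])
            next_state, reward, done = step(state, action)
            replay.append((state, action, reward, next_state, done))
            state = next_state
            total_steps += 1
            if len(replay) >= batch_size:
                # Learn from a random minibatch of past transitions, not just the latest one.
                for s, a, r, s2, d in rng.sample(list(replay), batch_size):
                    y = r if d else r + gamma * max(target_net.predict(one_hot(s2)))
                    online.train_step(one_hot(s), a, y, lr)
            if total_steps % target_sync == 0:
                target_net = online.copy()  # periodically refresh the frozen target network
            if done:
                break
    return online


net = train_dqn()
print([max(ACTIONS, key=lambda a: net.predict(one_hot(s))[a]) for s in range(GOAL)])
```

With these settings the printed greedy policy should usually settle on moving right in every cell, but a sketch this small is sensitive to the seed and hyperparameters. The replay buffer breaks the correlation between consecutive transitions, and the target network keeps the training target from shifting after every update.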

Real-world applications of reinforcement learning

- Robotics, such as robot navigation and robot soccer.

- Adaptive control, such as controlling factory processes and admission control in telecommunications.

- Game playing, such as tic-tac-toe and chess.

- Industrial automation: some automobile manufacturers use robots trained with deep reinforcement learning to pick goods and place them in containers.

- Business strategy planning, data processing, and recommendation systems.